Learning progress:

Watched a Unity tutorial on 3D physics: colliders + rigidbodies

Watched The Platform: very graphic, but a deep metaphor for capitalism and for the current coronavirus situation, with its social isolation and misinformation. 10/10.

Office hour with Ben/Danny/Sarah

Thesis group catch up

Unity Raycasting tutorial

Figured out how to scan across the image target by raycasting from the camera/screen center and converting the hit point from world space to local space
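Here is a minimal math sketch of the scanning idea, written in Python rather than Unity C#. It makes these assumptions:

- The image target is a flat plane with a known center, orthonormal axes, and width.
- The screen-center ray starts at the camera and points along the camera's forward axis.
- The names `ImageTarget`, `raycast`, `world_to_local`, and `scrub_time` are hypothetical.

In Unity, the corresponding pieces would presumably be `Camera.ViewportPointToRay` at (0.5, 0.5), `Physics.Raycast`, and `Transform.InverseTransformPoint`. The last of these also undoes scale, which this sketch does not.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class ImageTarget:
    """Hypothetical pose of a flat image target (unit, orthonormal axes, no scale)."""
    center: Vec3   # world-space position of the target's center
    right: Vec3    # world-space direction of the target's local x axis
    forward: Vec3  # world-space direction of the second in-plane axis
    normal: Vec3   # world-space direction perpendicular to the target
    width: float   # physical width along the local x axis


def raycast(origin: Vec3, direction: Vec3, target: ImageTarget) -> Optional[Vec3]:
    """Intersect a ray with the target's plane; None if parallel or behind the camera."""
    denom = dot(direction, target.normal)
    if abs(denom) < 1e-9:
        return None
    t = dot(sub(target.center, origin), target.normal) / denom
    if t < 0:
        return None
    return add(origin, scale(direction, t))


def world_to_local(point: Vec3, target: ImageTarget) -> Tuple[float, float]:
    """Project onto the target's axes (inverse of a rotation + translation)."""
    d = sub(point, target.center)
    return (dot(d, target.right), dot(d, target.forward))


def scrub_time(local_x: float, target: ImageTarget, clip_length: float) -> float:
    """Map local x (left edge to right edge) to a playback time, clamped to the clip."""
    u = local_x / target.width + 0.5
    u = min(max(u, 0.0), 1.0)
    return u * clip_length
```

With a 2-unit-wide target 5 units in front of the camera, a ray hitting 0.5 units right of center lands three quarters of the way across. On an 8-second clip, that is 6 seconds in.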

Office hour with Jasmine

Updated ideas with Noah and Tak

Tested image target position relative to the ARCamera + real-time audio scrubbing

Configured Unity for Google Cardboard

Researched scanning across image targets in Unity

Researched DSP capabilities in Unity: audio mixing/audio effects, the native audio plug-in SDK, Wwise

Developed composition systems for form II and form III

Watched the SXSW live stream from Japan: New Japan Island Project

Exported the Möbius piano roll as FBX with textures retained

Retrained the model target with textures and correct scale

Unity animation tutorial (1 hour)

Created thesis feedback collection form and sent out to list

Remodeled and printed the Möbius piano roll with note duration

Office hour with Sarah

Remodeled the Möbius strip piano roll with note duration

Limiting screen time has been extremely helpful for my productivity. Social media had consumed me both mentally and physically, and I was finally able to get back to work. I didn’t make much progress on thesis production, but overall I got many things done and it felt good.

Revised thesis production schedule

3 hours of Blender tutorial

Set up MP Delta Mini 3D printer

Made a wobbly animation in Blender using a simple Displace modifier

Fixed a clogged nozzle and printed a cat and the wobbly model

I have been so distracted by social media and everything that’s been going on with the coronavirus. It’s really stressing me out, and I’ve made zero progress since school closed last Wednesday. After a conversation with Valentine, I decided to follow her lead and document my life and work during the coronavirus outbreak. I hope this will boost my productivity, help me track my thesis progress, and keep me focused.

Set a 10-minute daily screen time limit for FB and IG

Came up with one system for the second form: Virtual ANS image-to-sound synthesis.

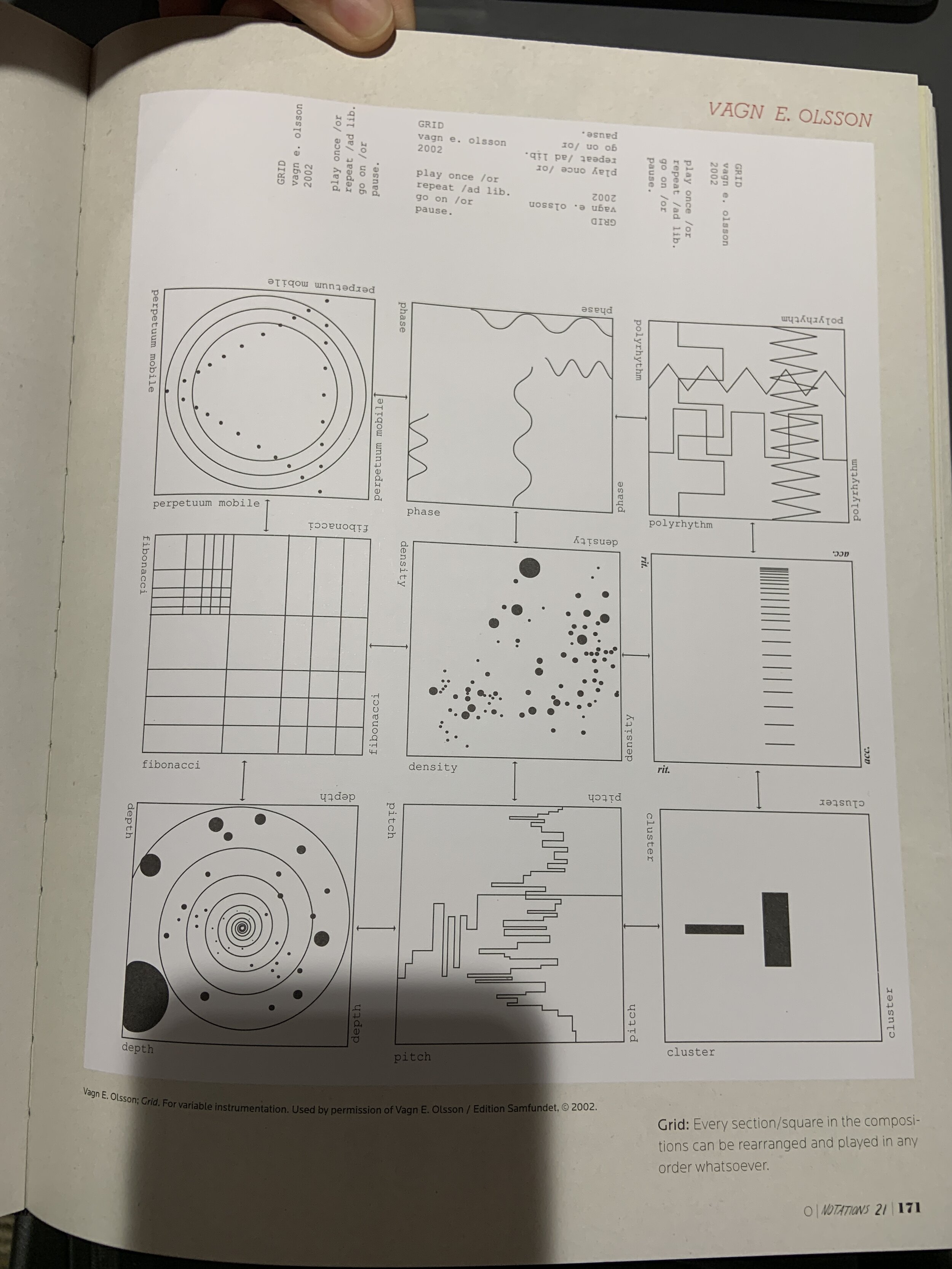
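The ANS synthesizer, which Virtual ANS emulates, treats the vertical axis of an image as pitch and the horizontal axis as time. Below is a rough additive-synthesis sketch of that general principle, not Virtual ANS's actual implementation:

- Each row is a sine oscillator on a logarithmic frequency scale, with the top row highest.
- Each column lasts a fixed time.
- Pixel brightness (0 to 1) sets the oscillator's amplitude.

The frequency range, sample rate, and function names are assumptions.

```python
import math
from typing import List, Sequence


def row_frequency(row: int, n_rows: int, f_min: float = 55.0, f_max: float = 3520.0) -> float:
    """Top row = highest pitch, bottom row = lowest, spaced logarithmically."""
    if n_rows == 1:
        return f_min
    frac = (n_rows - 1 - row) / (n_rows - 1)
    return f_min * (f_max / f_min) ** frac


def image_to_samples(image: Sequence[Sequence[float]], sample_rate: int = 8000,
                     column_duration: float = 0.1, f_min: float = 55.0,
                     f_max: float = 3520.0) -> List[float]:
    """Scan columns left to right, summing one sine per row weighted by brightness."""
    n_rows, n_cols = len(image), len(image[0])
    freqs = [row_frequency(r, n_rows, f_min, f_max) for r in range(n_rows)]
    samples_per_col = int(sample_rate * column_duration)
    out: List[float] = []
    for c in range(n_cols):
        for i in range(samples_per_col):
            t = (c * samples_per_col + i) / sample_rate  # global time keeps phase continuous
            s = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t) for r in range(n_rows))
            out.append(s / n_rows)  # divide by row count so output stays within [-1, 1]
    return out
```

A bright pixel in the top-left corner of a 2×2 image gives 0.1 s of a high tone followed by 0.1 s of silence.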

Since class 2, after settling on my final thesis concept, I started doing more in-depth research that diverged into two parallel paths:

- understanding the compositional methods and rules of a variety of serial music;
- looking at different graphic scores/notation systems to draw inspiration from musical expression in physical/geometric space.

Since almost all of these graphic scores are two-dimensional, I have been thinking a lot about the third dimension. It would add spatial characteristics to both the original composition and its visual representation, be it through the viewer’s physical interaction, sculptural materials, or spatial sound.

For now, I have decided on three topics, each of which will be represented by one of my three sculptural forms.

Form I. - Musical score as topological space

Form II. - Musical timbre as physical texture

Form III. - Musical sections as modular pieces

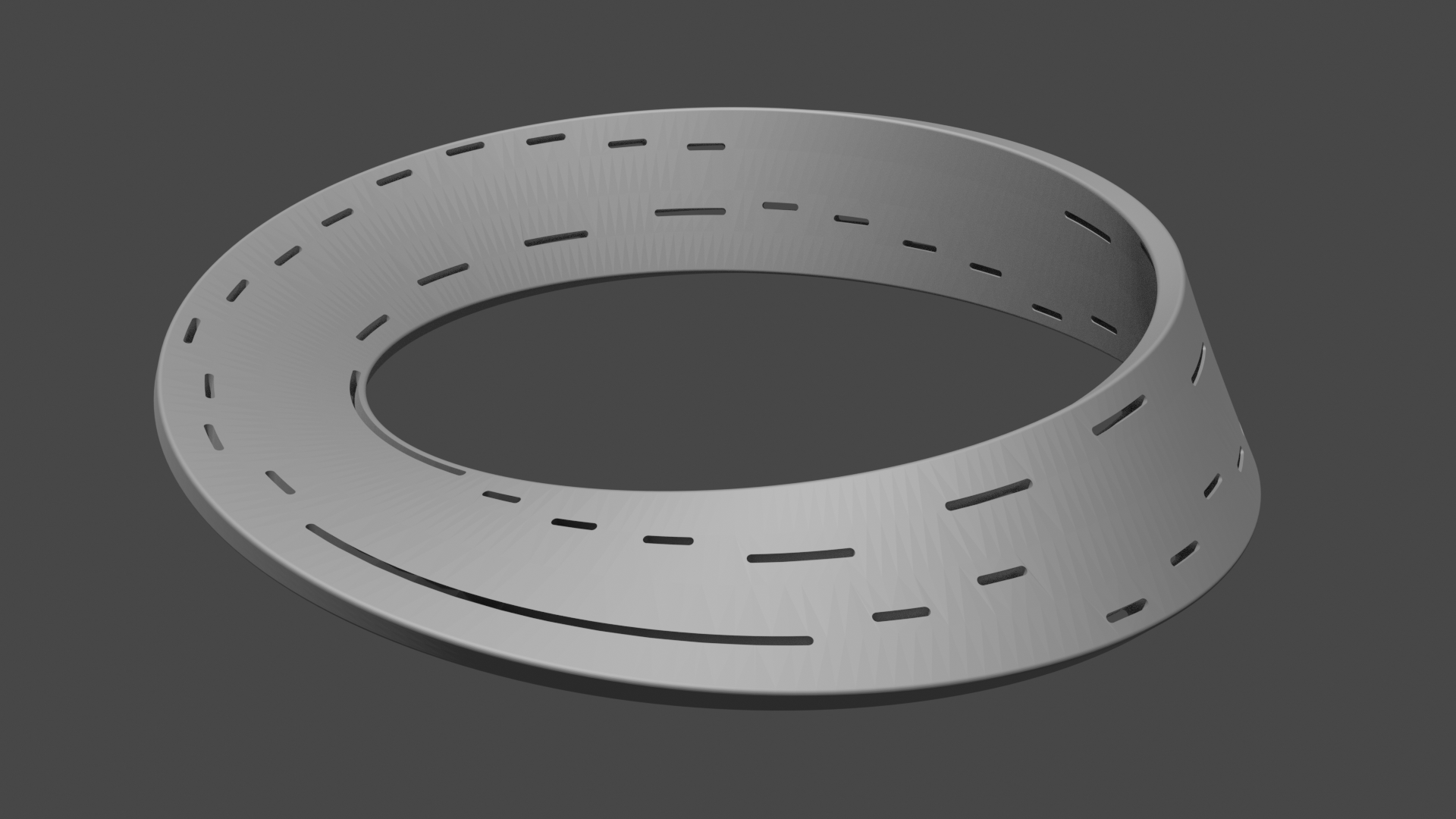

For the past two weeks I have been working on the first form, an investigation of Bach’s Canon 5 from BWV 1087. The four-measure excerpt of the top two voices has glide-reflection symmetry, and any periodic text with glide-reflection symmetry can be encoded on a Möbius strip. I’m really fascinated by this property of the score and decided to represent it as a Möbius strip piano roll. After consulting my undergraduate music theory professor, I also wrote my own four-measure piece that emulates this compositional method, and I’m going to use my own composition for the sculpture.
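Why does glide-reflection symmetry fit a Möbius strip? Take a strip that covers half the period L, give it a half-twist, and join it. A point at time t and height y is then identified with the point at time t + L/2 and height -y. That identification is exactly a glide reflection: shift by half the period, then mirror the pitch.

Below is a small Python check of that property on a piano roll. It assumes notes are (onset step, MIDI pitch, duration) tuples and that the period is an even number of steps. The function names are hypothetical. The melody in the test is made up; it is neither the Bach excerpt nor the composition described above.

```python
from typing import FrozenSet, Iterable, Tuple

# (onset step, MIDI pitch, duration in steps)
Note = Tuple[int, int, int]


def glide_reflect(notes: Iterable[Note], period: int, axis2: int) -> FrozenSet[Note]:
    """Shift every note by half the period (wrapping around) and mirror its pitch.

    axis2 is twice the mirror pitch, so the axis can sit between two semitones
    while everything stays an integer: the mirrored pitch is axis2 - pitch.
    """
    if period % 2:
        raise ValueError("period must be even")
    half = period // 2
    return frozenset(((onset + half) % period, axis2 - pitch, dur)
                     for onset, pitch, dur in notes)


def has_glide_symmetry(notes: Iterable[Note], period: int, axis2: int) -> bool:
    """True if the glide reflection maps the set of notes onto itself."""
    note_set = frozenset(notes)
    return note_set == glide_reflect(note_set, period, axis2)
```

Writing the first half of a melody and appending its glide reflection always yields a symmetric pattern. Only the first half then needs to be printed once on the twisted strip.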

Excerpt from Bach’s Canon 5, BWV 1087

I modeled different variations of the Möbius strip in Blender and 3D printed them.

For the AR portion, I used Vuforia’s Model Target Generator to train a database from the CAD model for all background colors and lighting conditions, enabling 360° recognition and tracking in Unity. The result is still not very satisfying. The object has a uniform surface and color, a partially symmetrical shape, and few complex details. I will keep experimenting with the model’s color/texture/shape and scale to improve detection accuracy.

I imagine the AR animation having a playhead that cruises along all sides (top, bottom, inside, outside) of the Möbius strip, triggering notes as it passes them. To support this, I placed an occlusion model with a depth mask shader exactly where the model target is detected. The occlusion model appears transparent, but it prevents virtual content behind the physical object from being rendered on top of it.
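The "playhead on all sides" idea has a geometric reason behind it. On a Möbius strip, one lap brings the playhead back to the same place along the loop but mirrored across the centerline, so it takes two laps to return to its starting point.

Here is a minimal Python sketch built on these assumptions:

- It uses the standard parametrization of a Möbius strip: u is the angle around the loop and s is the offset from the centerline.
- One musical period is mapped to two laps.
- The function names are hypothetical.

It only computes playhead positions and crossed onsets; rendering and occlusion stay in Unity.

```python
import math
from typing import List, Sequence, Tuple


def mobius_point(u: float, s: float, radius: float = 1.0) -> Tuple[float, float, float]:
    """Point on a Möbius strip: u = angle around the loop, s = offset from the centerline."""
    r = radius + s * math.cos(u / 2)
    return (r * math.cos(u), r * math.sin(u), s * math.sin(u / 2))


def playhead_position(t: float, period: float, s: float,
                      radius: float = 1.0) -> Tuple[float, float, float]:
    """One musical period = two laps (u from 0 to 4*pi), so the playhead covers both 'sides'."""
    u = 4 * math.pi * (t % period) / period
    return mobius_point(u, s, radius)


def notes_passed(onsets: Sequence[float], prev_t: float, t: float) -> List[float]:
    """Onsets crossed by the playhead since the last frame (no wrap-around handling)."""
    return [onset for onset in onsets if prev_t <= onset < t]
```

After half a period, the playhead sits at the mirrored offset of where it started. After a full period, it is back where it began.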

The second form is inspired by Arnold Schoenberg’s Five Pieces for Orchestra, Op. 16. In the third movement, "Farben," “harmonic and melodic motion is restricted, in order to focus attention on timbral and textural elements. Schoenberg removed all traditional motivic associations from this piece, that it is generated from a single harmony: C-G♯-B-E-A (the Farben chord, found in a number of chromatically altered derivatives), and is scored for a kaleidoscopically rotating array of instrumental colors” (Wikipedia). I thought it would be interesting to create a physical form made of different materials that represent different musical timbres.

For the third form, I plan to explore chance operations, a composing technique favored by the legendary John Cage. In Solo for Piano, Cage created 62 pages in total, but players were encouraged to rearrange the composition. In Music of Changes, the compositional process involved decisions made using the I Ching. I also explored randomness and chance in my performance Sonic Cubes last year, and I might revisit some aspects of that performance for the third form.
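As a rough illustration of an I Ching-driven chance operation, here is a Python sketch of the traditional three-coin method. Heads count as 3 and tails as 2, so each line sums to 6, 7, 8, or 9; odd sums are yang and even sums are yin. The resulting hexagram picks one entry from a 64-item chart, loosely in the spirit of the charts Cage consulted for Music of Changes.

Some simplifications apply:

- The hexagram index is just the six lines read as binary (bottom line = lowest bit), not the traditional King Wen numbering.
- Changing lines are ignored.
- The function names and the chart are hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def toss_line(rng: random.Random) -> int:
    """Three coins, heads = 3 and tails = 2, giving 6, 7, 8, or 9."""
    return sum(rng.choice((2, 3)) for _ in range(3))


def cast_hexagram(rng: random.Random) -> List[int]:
    """Six lines, built from the bottom line up."""
    return [toss_line(rng) for _ in range(6)]


def hexagram_index(lines: Sequence[int]) -> int:
    """Yang (odd) = 1, yin (even) = 0; bottom line is the least significant bit (0-63)."""
    return sum((line % 2) << i for i, line in enumerate(lines))


def choose(chart: Sequence[T], rng: random.Random) -> T:
    """Pick one chart entry (e.g. a sound, duration, or dynamic) by casting a hexagram."""
    if len(chart) != 64:
        raise ValueError("chart must have 64 entries")
    return chart[hexagram_index(cast_hexagram(rng))]
```

Seeding the `random.Random` instance makes a chance-derived score reproducible, which could help when a performance needs to be rehearsed.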

Currently, I’m also talking to some very talented composers/musicians about potentially collaborating on the second and third forms.

Since class 1, I have been talking with some experts and peers to gather advice and feedback on both technical production and interaction. I was advised against using Swift for my original multiplayer spatial audio AR app, so I decided to go with Unity, which I had just started learning. After a week of prototyping some simple interactions, I found that manipulating sound in AR over a multiplayer connection might be too technically ambitious. I might lose a lot of time learning the program from scratch and not have enough time for playtests. Therefore, I decided to pivot my concept again.

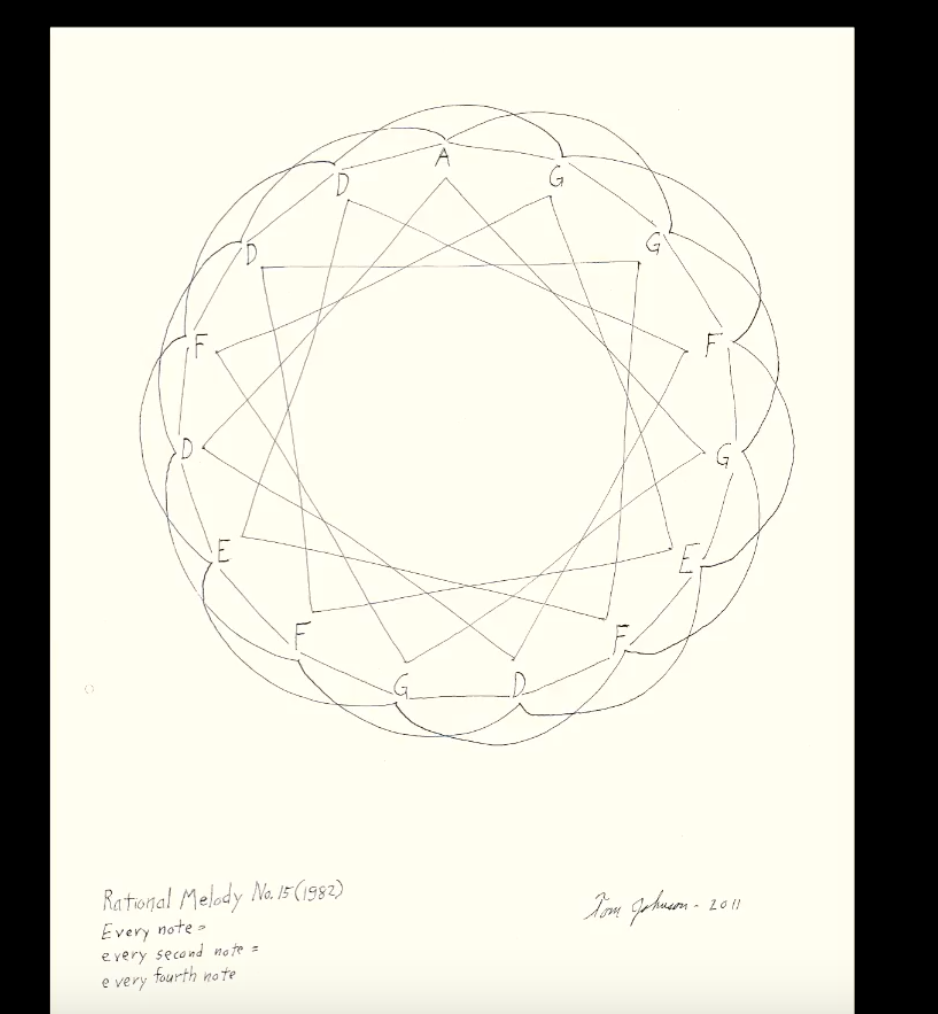

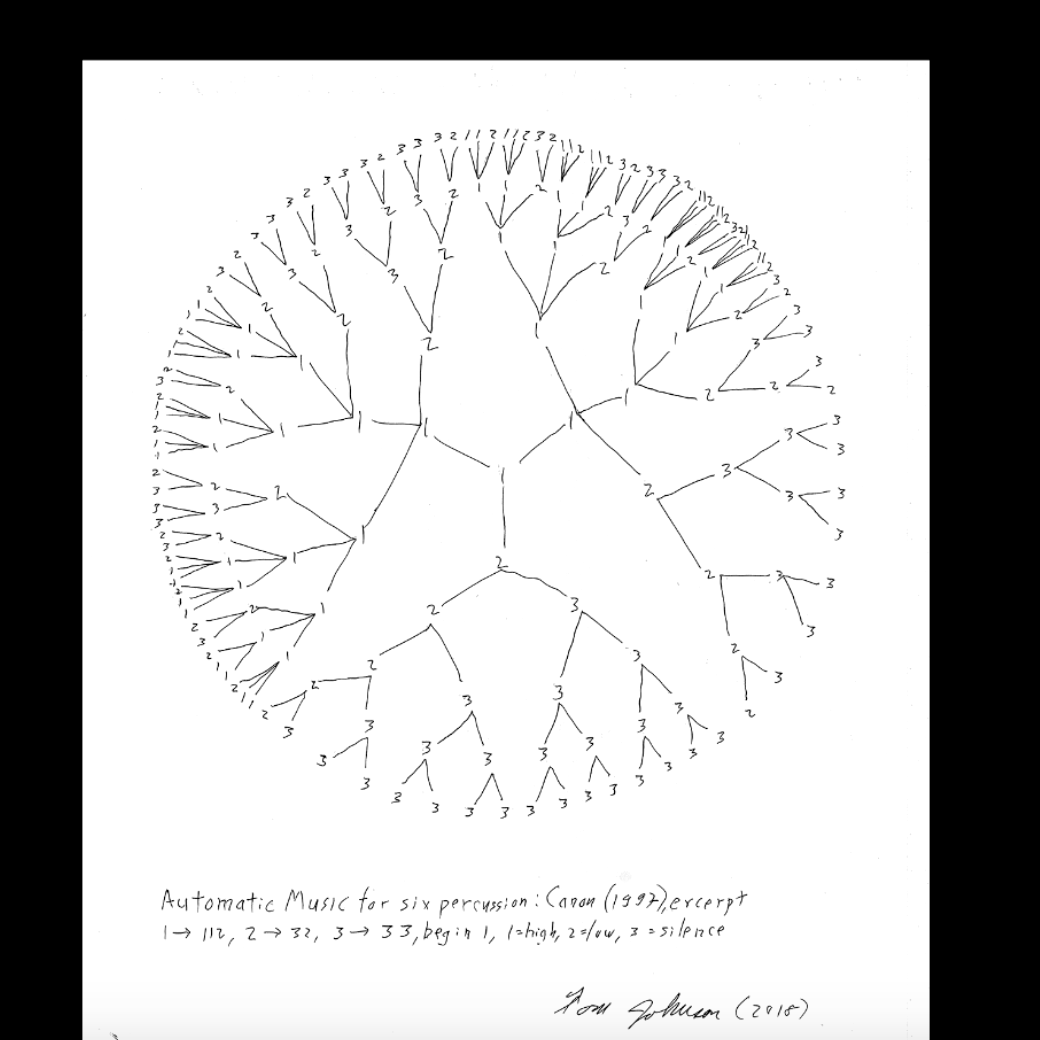

Since the beginning of last thesis semester, I have been researching the structures and forms of music compositions and the composing techniques of some of the most famous 20th century avant-garde musicians. I’m obsessed with serialism and minimalism, and with the idea that music can arise from a process. John Cage’s Music of Changes, Steve Reich’s Piano Phase, and Terry Riley’s In C are all good examples of the style. I also came across composer Mark Applebaum’s The Metaphysics of Notation, as well as Tom Johnson’s illustrated music. I was particularly drawn to the aesthetic of graphic scores arising from simple geometric shapes, and to the modularity and rationality of the music they represent. These forms not only generate music compositions but also visualize the music’s structure in a beautiful way.

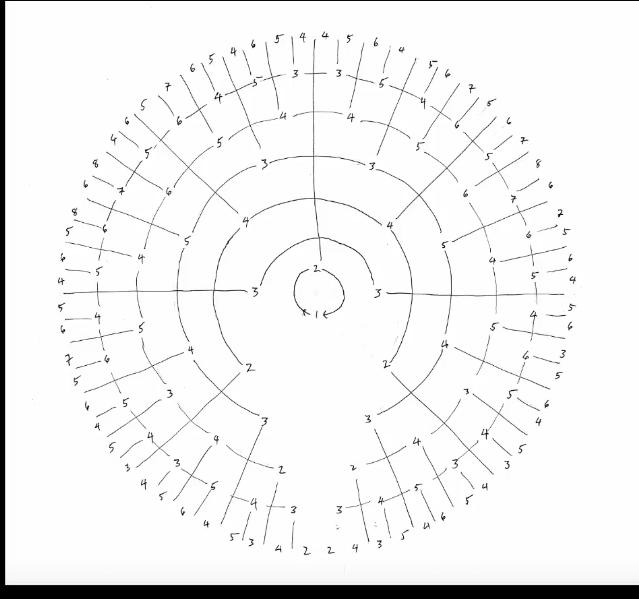

I see a lot of potential in these algorithmic forms for both music creation and visualization. I would love to create some of them in 3D as sculptural pieces and use AR to detect each object and animate the process on it as the music plays. I started by taking an existing composition, Tom Johnson’s Rational Melody XV, and 3D printing a form from it as a proof of concept. The final project will ideally be a set of three sculptures whose compositions/scores are based, in part or entirely, on my own set of rules inspired by these musicians.

Things I want:

Participants create and react based on one another's actions, truly collaborating and interacting with each other and paying attention to the sound in their environment.

Participants build connections with each other through the activities.

Participants understand and utilize focal and global attention

Participants walk away with better awareness of the sounds they live in

Musicians use the app as a sound practice

To have museums/galleries/educational institutions sponsor my app and bring it to a wider audience

Things I don’t want:

Participants place sounds arbitrarily

Participants disregard what others are doing, ruining the group experience

Things I’m Okay with:

Participants having fun with this activity

Kids use the app

People’s awareness of the sounds they live in

People use the app in social settings: friends gathering, team building, ice-breaking, family bonding

People keep coming back to the app after the first experience

Number of sponsors willing to sponsor my app

Number of downloads in the app store

Use of app in music education settings

Broad-stroke implementation plan

An interactive sonic landscape in AR generated collectively by multiple users in real-time. Each user gets to place audio tracks anywhere in AR space in reaction to other users’ actions. The collaborative effort allows the creators as well as listeners to experience an orchestra of sounds while nestled among individual sound sources coming from all directions in virtual space.

Pauline Oliveros - Deep Listening

Global and focal attention

Attention to site - absorb and respond to sounds of environment

Observe and notice the nature of sound, and its power of release and change

Deep Listening pieces contain strategies for listening and responding. Practices like the Antiphonal Meditation and the Tuning Meditation explore how individuals in a group respond to one another's tones by alternately tuning to others and shifting their own tones. “There is no leader, just consensual activity.”

This research shaped my idea of inviting multiple players into the experience. It’s a group activity in which each individual's decisions are influenced by the others' and together contribute to the whole sound sculpture.

Music, Sound and Space - Transformations of Public and Private Experience

Sound-masses/shifting planes in the light of linear counterpoint,

Therefore introducing three dimensions in music: horizontal, vertical, and dynamic swelling or decreasing, as we can visualize in any sheet music

The fourth, sound projection ... [the sense] of a journey into space.

Today, with the technical means that exist and are easily adaptable, the differentiation of the various masses and different planes as well as these beams of sound could be made discernible to the listener by means of certain acoustical arrangements ...

This inspires me with the possibility of agglomerating and mapping different sonic features, such as rhythms, frequencies, and intensities, into a configuration that responds to changes in spatial direction.

The use of space as a compositional parameter drew attention to the fact that every listener has a unique experience of a given work depending on his or her position

Sound sculptures are often site-specific; listeners can only experience them in museums, galleries, or outdoor spaces. Bringing them into an AR environment lets listeners participate firsthand in creating an auditory experience that reconfigures whatever space they are in, without restrictions.

Through this experience, I also want to promote the practices of deep listening. According to Pauline:

By practicing these strategies to absorb and respond to the sounds of the environment, listening can become a multidimensional phenomenon: one can listen and perform simultaneously.

I have been working on a few AR projects over the past 6 months, one of which involved spatial audio in AR.

Advancements in spatial audio and multiplayer capabilities in AR/VR are exciting.

User journey:

User chooses single or multiplayer mode depending on whether he’s alone or with friends.

Then the user chooses a scene that contains a set of preconfigured sounds.

Each user is assigned a unique color/visual marker that represents them and distinguishes them from others in the scene.

Once users enter the AR scene, they can start placing sound markers, each associated with an audio clip, anywhere in the environment, based on the sounds they hear from other users.

Depending on where they place the markers, the audio clip will sound different.

Each marker animates to the dynamics of its audio track.

Users walk around and experience the immersive soundscape; the intensity of each audio clip rises and fades as they walk closer to or farther from its sound marker.
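The rise-and-fade behavior and the position-dependent sound can be approximated with two simple rules. This Python sketch assumes:

- the listener and markers stand on a flat floor (x, z);
- volume follows a clamped inverse-distance rolloff, gain = min_distance / d, which has the same shape as OpenAL's clamped inverse-distance model with a rolloff factor of 1;
- an equal-power stereo pan depends on where the marker sits relative to the listener's heading.

Real AR spatial audio usually relies on HRTF rendering and the engine's own rolloff curves (for example Unity's `AudioSource` rolloff modes), so this is only an approximation. The names and default distances are hypothetical.

```python
import math
from typing import Tuple


def distance_gain(distance: float, min_distance: float = 1.0,
                  max_distance: float = 20.0) -> float:
    """Clamped inverse-distance rolloff: full volume inside min_distance, 1/d beyond it."""
    d = min(max(distance, min_distance), max_distance)
    return min_distance / d


def stereo_gains(listener: Tuple[float, float], yaw: float,
                 marker: Tuple[float, float]) -> Tuple[float, float]:
    """(left, right) gains for a marker on the floor plane (x, z).

    yaw is the listener's heading in radians, measured from +z towards +x.
    """
    dx, dz = marker[0] - listener[0], marker[1] - listener[1]
    dist = math.hypot(dx, dz)
    right_x, right_z = math.cos(yaw), -math.sin(yaw)  # listener's right-hand direction
    pan = (dx * right_x + dz * right_z) / dist if dist > 0 else 0.0  # -1 left .. +1 right
    angle = (pan + 1) * math.pi / 4  # equal-power pan law
    gain = distance_gain(dist)
    return (gain * math.cos(angle), gain * math.sin(angle))
```

A marker straight ahead at 2 m plays equally in both ears at half volume. A marker 3 m to the right plays almost entirely in the right ear at a third of full volume.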

Sound design:

I intend to keep the sound less musical, as minimal and abstract as possible

Some may be more analog/environmental, others more digital/synthesized

references:

Platform/tool: Swift or Unity

Define users

Number of players

Considerations in sound design

What might it look like to join the conversation? What form my contribution could take?

Imagine your project in as many ways as you can. Complete all of the following:

* 5-3-3:

a. 5 Questions that you will investigate with your thesis project

Music makes people want to dance, so people move their bodies following the dynamics of the music. What if it were the other way around? Can dancers become the creators of music, rather than its interpreters, by using their bodies as instruments?

How do dancers express themselves in response to any given environment?

How can we maximize the affordances of mobile devices to generate music with the body?

What is the relationship between body, music, and structure?

How should sound controls and samples be distributed across different joints/parts of the body to maximize expressivity while balancing the complexity of motion and sound?

b. 3 Possible venues for your work to be shown. Why? ( Look at artists or creators who show their work in these venues and explain why you would want to be part of these institutions or venues.)

public space

c. 3 Experts or types of people you would like to speak to about your thesis. Describe why you would want to speak to these 3 people. (They should be realistic. Not fictional or deceased people)

Different levels of dancers: Casual party dancer, amateur dancer, professional dancer - friends and classmates

Sound engineers and musicians - professors at ITP

UI/UX, graphic designers

* A model, in the style of a “Cornell Box,” pointing to the emotional impact of your thesis. (This should be a representation of its intended impact, not a technical or procedural model.)

* Scenario/Storyboard: Written, video or graphic scenario of the experience. (e.g. A storyboard that walks through the experience for any design, UX, product thesis, time-based media project)

The user goes to a park, opens the app, and chooses a preset of sounds or starts recording sounds from his/her environment: leaves rustling, birds chirping, water flowing, people talking, a bike bell ringing, voice humming, clapping. The user attaches these sounds to different joints/parts of an avatar body. Then the user either has a friend hold the phone with the back camera pointed at him/her, or places the phone somewhere with the front camera pointed at his/her full body. The user can wear wireless headphones. An audio cue then prompts the user to compose music with the recorded sounds, using the body as an instrument. The user listens to the music he/she creates from the surroundings with the body, and can then save and share the experience with others.
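One way the "sounds attached to joints" idea could work:

- Each frame, the body tracker provides joint positions; with ARKit 3, these would come from the tracked body's skeleton.
- A joint fires its sample when its speed crosses a threshold.

Here is a minimal Python sketch under those assumptions. The joint names, sample file names, and the 1.5 m/s threshold are hypothetical. Triggering only on the rising edge keeps a fast-moving hand from retriggering every frame.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class JointTrigger:
    sample_for_joint: Dict[str, str]     # hypothetical joint name -> sample file
    speed_threshold: float = 1.5         # metres per second (hypothetical value)
    _prev_pos: Dict[str, Vec3] = field(default_factory=dict, init=False, repr=False)
    _was_fast: Dict[str, bool] = field(default_factory=dict, init=False, repr=False)

    def update(self, joints: Dict[str, Vec3], dt: float) -> List[str]:
        """Return the samples to play this frame, given joint positions and frame time."""
        fired: List[str] = []
        for joint, pos in joints.items():
            sample = self.sample_for_joint.get(joint)
            prev = self._prev_pos.get(joint)
            self._prev_pos[joint] = pos
            if sample is None or prev is None or dt <= 0:
                continue  # unmapped joint, first frame, or bad timestep
            speed = math.dist(pos, prev) / dt
            fast = speed >= self.speed_threshold
            if fast and not self._was_fast.get(joint, False):
                fired.append(sample)  # rising edge: the joint just became fast
            self._was_fast[joint] = fast
        return fired
```

A quick flick of the right hand plays its sample once. The hand has to slow down below the threshold before it can trigger again.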

* Persona: Who is the target user? Develop a thorough character profile of the user. (see description of what goes into a Persona).

A spontaneous dancer who enjoys dancing in any location that inspires her, even without the accompaniment of music

Musician who is constantly exploring new avenues of musical expression.

Kid who likes to move around a lot, interested in new interactions and playful experiences.

Interact with Ambient sound in the environment

Body causing turbulence around the user’s sound environment

Write/Make notes about what you’ve learned.. How do your sources relate to each other? New avenues to explore.. Or settling on one…Keep observations, ideas and names that pop up in your research diary.

Make a (physical, analog) chart with the questions you have and answers so far and bring it to class. You will share it in class, and colleagues will make suggestions

Here are some critical questions to answer on the blog:

What else is out there like it?

How is yours different?

How does it improve what exists?

What audience is it for?

What is the world/context/ market that your project lives in?

In your initial research, have you found something you didn’t expect? Is it an interesting path to follow?

What do you need to know about the content/story?

What do you need from a tech standpoint?

If it’s too much for the time.. Are there any discrete parts of it you could accomplish?

After a first round of research on my big concept, music structure and form, I reflected on the similarities among the projects I’ve made at ITP. I found that they revolve around a common theme: music/sound and motion. Identifying this theme was a breakthrough in my brainstorming process. It allowed me to narrow my original concept of evolving music structures down to the relationship between music, structure, and motion. That made me think of the human body, which generates motion but is bounded by structure.

Therefore, as my research progressed, I shifted my focus to music and body movement. Some existing projects explore the same concept:

- Human Harmonic - A symphony of sound from the human body, a vinyl record that translated medical data into a musical experience of the body at work;
- Body Music Festival;
- Body Percussion, where musicians and dancers create music by clapping and tapping different body parts.

There are also countless projects that use body tracking, such as the ml5 PoseNet machine learning model or Kinect, to turn the body into an instrument. Examples include Above, Below and In Between, Astral Body, Body Instrument (Kinect Body Instruments), NAGUAL DANCE, Body Beat, Pose music, Posenet music, and melody shapes. These projects all rely on a computer, a webcam/Kinect, and music applications like Ableton, which limits the setup. Users have to install the computer and camera and run programs, and it only works in certain locations.

I want to make the interaction more accessible and portable by exploring the affordances of smartphones and AR, and by treating the users' surroundings as an inseparable part of the experience. It could be a choreographic tool for dancers, or simply a fun way for anyone to make music out of a mundane scene. The project will live on iOS 13 with ARKit 3, which enables body tracking with the iPhone’s camera.

In my initial research, I found an inspiring piece, The Metaphysics of Notation, a graphic score by composer Mark Applebaum in which musicians are free to interpret and improvise on what they see with their instruments. I’m not sure yet how it would fit into my concept. So far, I’m still exploring how to relate the structure of the body to the structure of music by investigating the composition techniques of 20th century avant-garde musicians.

With new search terms: "body" AND "music" AND "structure", and "motion" AND "music" AND "structure", some relevant books I found are:

Body, sound and space in music and beyond : multimodal explorations

Sound worlds from the body to the city : listen!

Music, sound and space : transformations of public and private experience

Music, analysis, and the body : experiments, explorations, and embodiments

Sound, music and the moving-thinking body

Body and time : bodily rhythms and social synchronism in the digital media society

Gesture control of sounds in 3D space

Piano&Dancer: Interaction Between a Dancer and an Acoustic Instrument

Torrent: Integrating Embodiment, Physicalization and Musification in Music-Making

Take your idea/concept and do research to find out where it fits in the world of research and develop concept map. Look at your area… what are the terms used, who are the people, where do you find their work, what looks interesting. Record all your observations in the Research Diary. Gathering as much as possible...

Database:

Amazon, NYU Libraries, ACM Digital Library, YouTube

What I searched:

“Avant garde” AND music, Avant garde music AND (bibliography OR sourcebook), “language” AND “music”, “noise” AND (tone OR music), Psychoacoustics, Algorithmic composition, Fluxus

Potentially good books/articles/websites you found + notes or reflection/terms/ideas:

How to Sound Like Steve Reich - ripple effects, kaleidoscope, speech melody

Is John Cage's 4'33'' music?: Prof. Julian Dodd at TEDxUniversityOfManchester - removal of composer’s ego

Inspirational Working Methods: John Cage and the I Ching - I-CHING, Oblique strategies

Transforming Noise Into Music | Jackson Jhin | TEDxUND - Predictability vs variability

Tristan Perich - Microtonal Wall at Interaccess - Walkthrough - microtonal music, demonstration of the relationship between noise and tone

The difference between hearing and listening | Pauline Oliveros | TEDxIndianapolis - expand the perception of sound, vibration, focus and expansion, psychoacoustics

Tristan Perich: 1-Bit Symphony - 1-bit music

The Geometry of Musical Rhythm: What Makes a "Good" Rhythm Good?

Music by the Numbers: From Pythagoras to Schoenberg

Perceptible processes : minimalism and the baroque

Music of the twentieth-century avant-garde a biocritical sourcebook

Musically incorrect : conversations about music at the end of the 20th century

Anthology of essays on deep listening - Deep Listening's ability to nurture creative work and promote societal change.

Deep listening : a composer's sound practice - how consciousness may be affected by profound attention to the sonic environment. ... Undergraduates with no musical training benefit from the practices and successfully engage in creative sound projects.

Boring formless nonsense : experimental music and the aesthetics of failure

Seeing sound : sound art, performance and music, 1978-2011 : Gordon Monahan

I am interested in how music is created from structures and rules, and in how those boundaries have constantly been tested throughout music history. Composition became much less restricted by rules and structures in the Romantic period than in the earlier Classical and Baroque periods. Later came Schoenberg's twelve-tone music in the early 20th century and the minimalists' process music in the 1960s. Avant-garde musicians continually challenge the definition of music and change the way music is perceived by breaking old traditions and inventing new rules.

There is a spectrum between predictability and variability when we judge whether a piece of music is pleasing. Musicians and listeners can fall anywhere on this spectrum, which shapes a musician's style and a listener's taste.
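Shannon entropy is one way to put a number on the predictability end of this spectrum. This Python sketch computes two quantities for a note sequence:

- the entropy of the notes themselves;
- the conditional entropy of each note given the previous one.

It is an illustration rather than an established measure of musical pleasure, and the example melodies are made up.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def entropy_bits(seq: Sequence[Hashable]) -> float:
    """Shannon entropy of the symbol distribution, in bits per symbol."""
    n = len(seq)
    if n == 0:
        return 0.0
    return sum((c / n) * math.log2(n / c) for c in Counter(seq).values())


def conditional_entropy_bits(seq: Sequence[Hashable]) -> float:
    """H(next note | previous note) = H(pairs) - H(previous notes), in bits."""
    if len(seq) < 2:
        return 0.0
    pairs = list(zip(seq[:-1], seq[1:]))
    return entropy_bits(pairs) - entropy_bits(list(seq[:-1]))
```

An alternating two-note figure such as C4 D4 C4 D4 C4 D4 has 1 bit of entropy per note. Once the previous note is known, it has 0 bits, meaning it is completely predictable.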