Of all the musical elements we have covered in the Code of Music class up to week 6, I found synthesis the most interesting and, at the same time, the most challenging. Having studied music theory and played classical piano for a long time, I found rhythm, melody, and harmony to be old and familiar concepts, whereas synthesis, the technique of generating music from scratch using electronic hardware and software, was never taught in my previous theory classes. Even with a little knowledge of synthesis from my Intro to MIDI class, I still found it hard to wrap my head around the code that applies all these new concepts, even after I finished my synth assignment. So I decided to challenge myself and refine that assignment by experimenting further with some synth techniques.

In my previous synth assignment, I drew inspiration from the Roland TB-303 bass line synthesizer and created a simple audio-visual instrument that manipulates the filter Q and the filter envelope decay. The TB-303 has a very distinctive chirping sound that became the signature of acid house and a lot of techno music. Having listened to countless songs in those genres, I have picked up, at least on the surface, certain patterns in how they change subtly and gradually, layer by layer. After talking with Luisa and our resident Dominic, I thought: why not expand my assignment into an audio-visual techno instrument with multiple control parameters, so that I and other amateur fans can play with it and experience the joy of creating and manipulating our own techno track on the go?

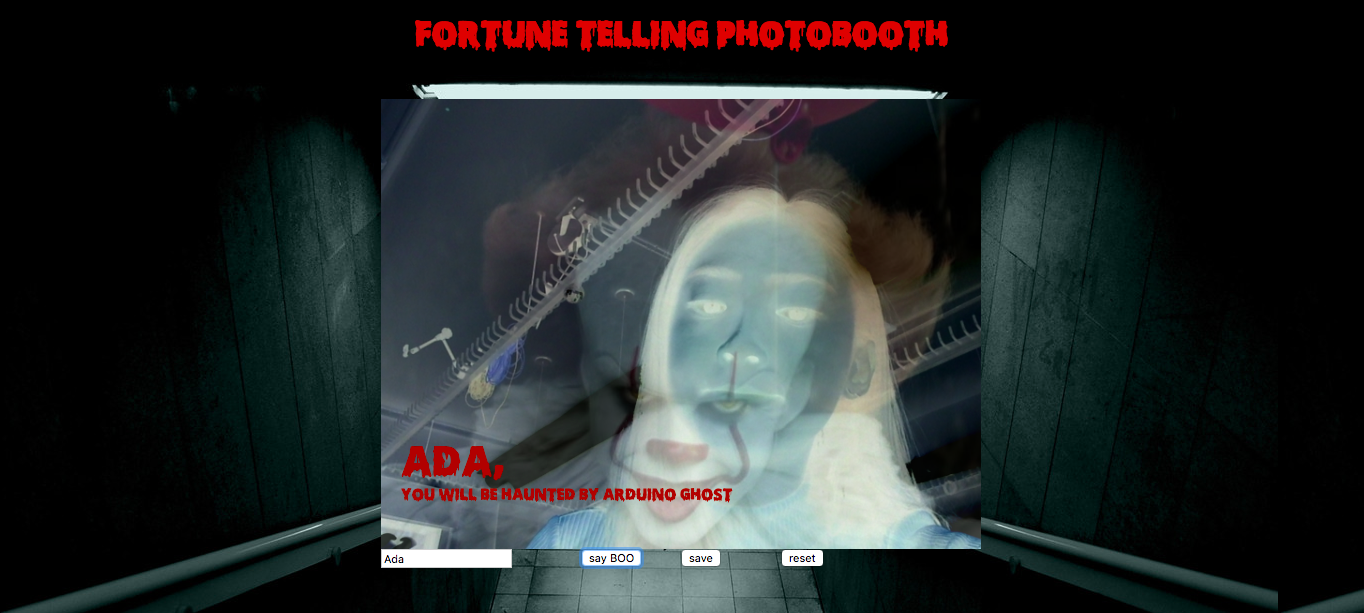

In the beginning, I was completely lost and didn't know where to start with this project. Nevertheless, I began experimenting with all the synth types and effects in the Tone.js docs, and some ideas started to emerge.

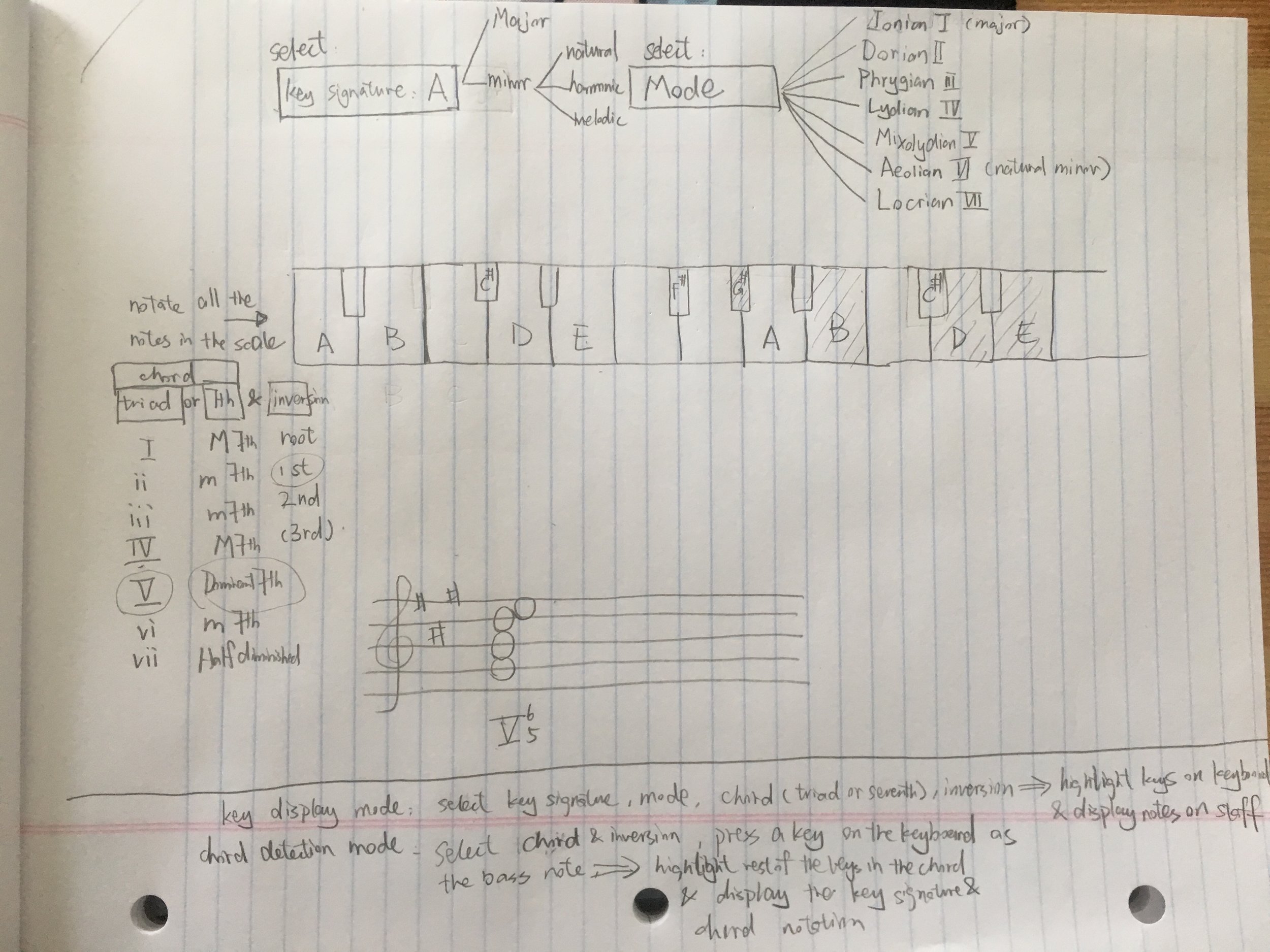

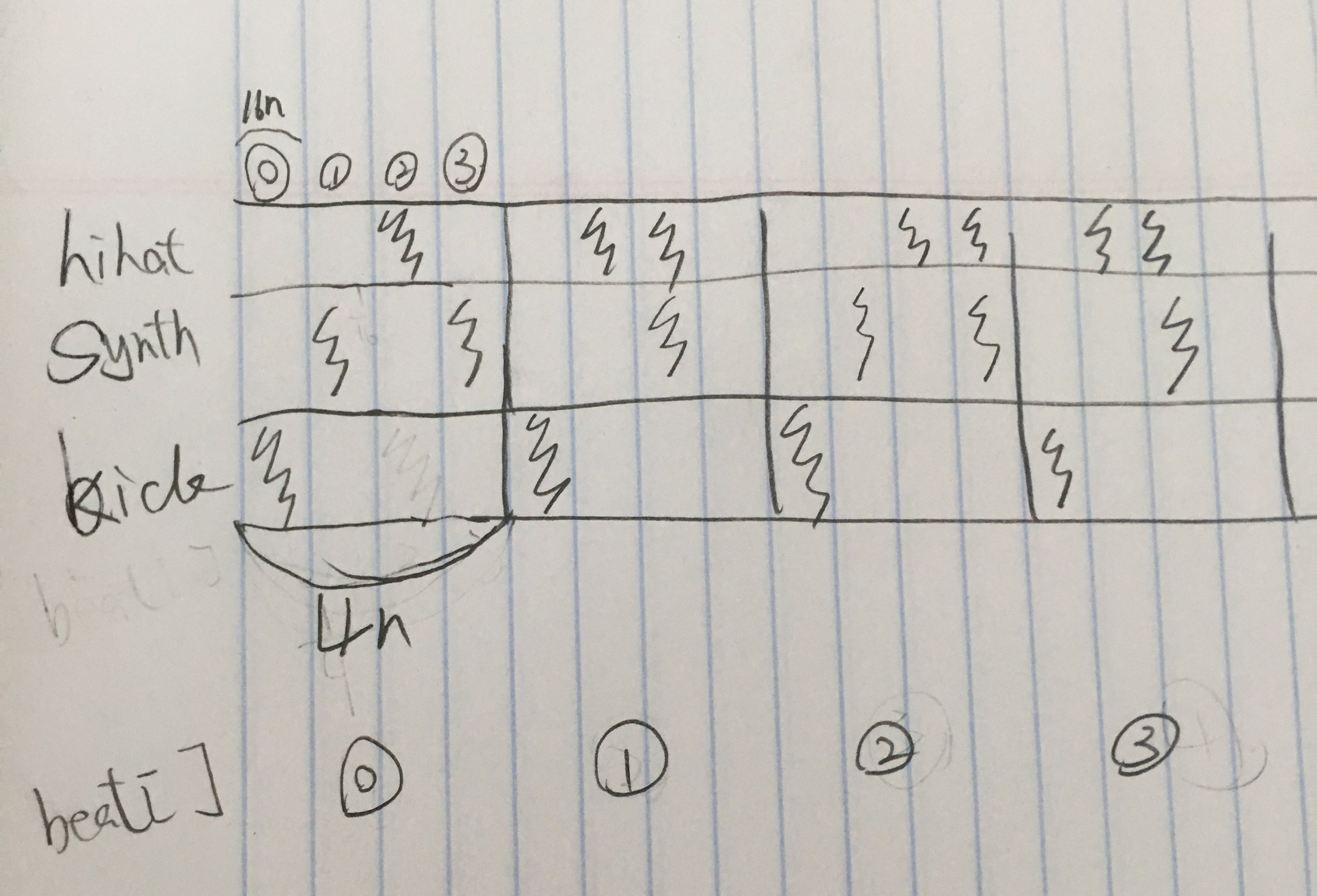

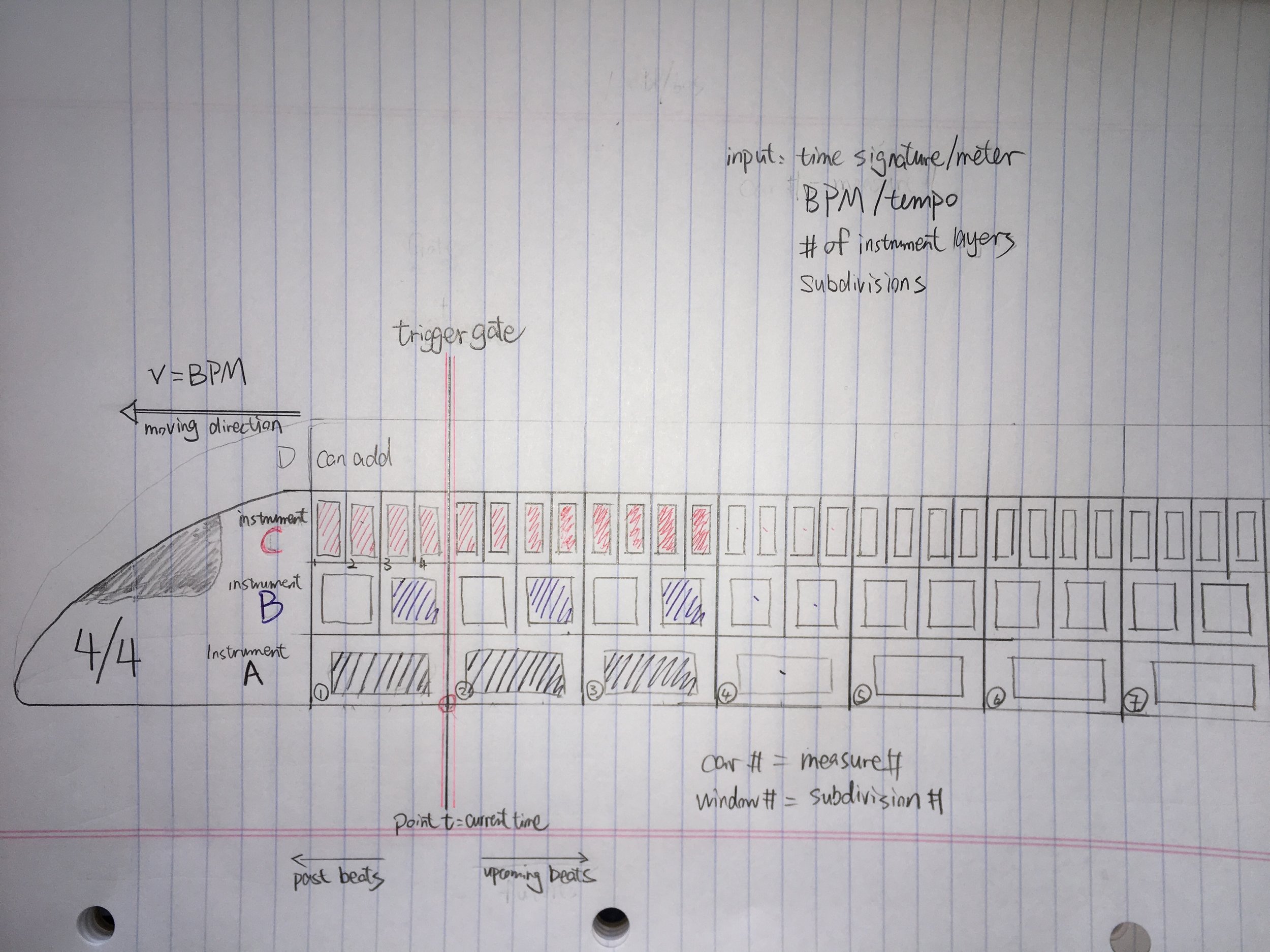

I used a MembraneSynth for the kick drum and a Loop event to trigger it every "4n". This produces a four-on-the-floor rhythm: a steady, accented beat in 4/4 time where the kick drum hits on every beat (1, 2, 3, 4). I also connected a compressor to the kick to control its volume. I then asked myself how I could manipulate the bass without changing the synth. One of the most common techniques DJs use when mixing tracks is to apply a high-pass filter that sweeps out the bass during a transition. So I added a high-pass filter to my MembraneSynth, controlled by a kick slider, and had the same slider change the brightness of the entire visual by inverting the black and white colors. I referred to this article to set my filter slider values, "Low end theory: EQing kicks and bass": "put a similarly harsh high pass filter on the bass sound and set the frequency threshold at 180Hz again, so that this time you're scooping out tons of bass."
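A slider-to-filter mapping of the kind described above could look roughly like this. It is only a minimal sketch under stated assumptions: the 0 to 1 slider range, the 20 Hz floor, and the helper names `sliderToCutoff` and `sliderToColors` are made up for illustration. Only the 180 Hz ceiling comes from the quoted article.

```javascript
// Hypothetical helper: map a 0..1 slider value to a high-pass cutoff in Hz.
// The 180 Hz ceiling follows the quoted article; the 20 Hz floor is an assumption.
function sliderToCutoff(value, minHz = 20, maxHz = 180) {
  const v = Math.min(1, Math.max(0, value)); // clamp to the slider range
  return minHz + v * (maxHz - minHz);
}

// Hypothetical helper: invert black and white as the slider moves.
// Returns grayscale levels (0 = black, 255 = white) for background and foreground.
function sliderToColors(value) {
  const v = Math.min(1, Math.max(0, value));
  const background = Math.round(255 * v);
  return { background, foreground: 255 - background };
}

// In a Tone.js sketch, the cutoff would typically be written to the
// filter's frequency, e.g. kickFilter.frequency.value = sliderToCutoff(v),
// where kickFilter is an assumed name for the high-pass filter.
```

Keeping both mappings as small pure functions means the sound and the visual always read the same slider value, so they cannot drift apart.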

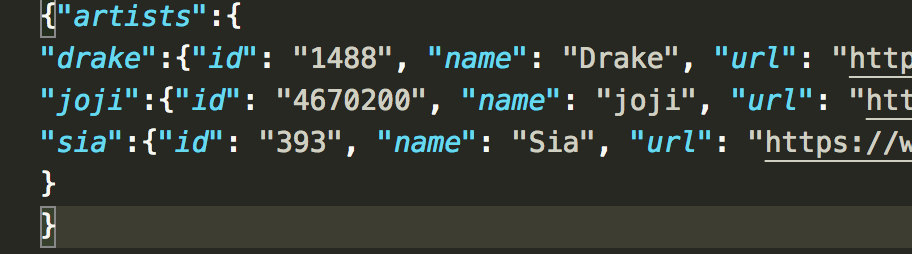

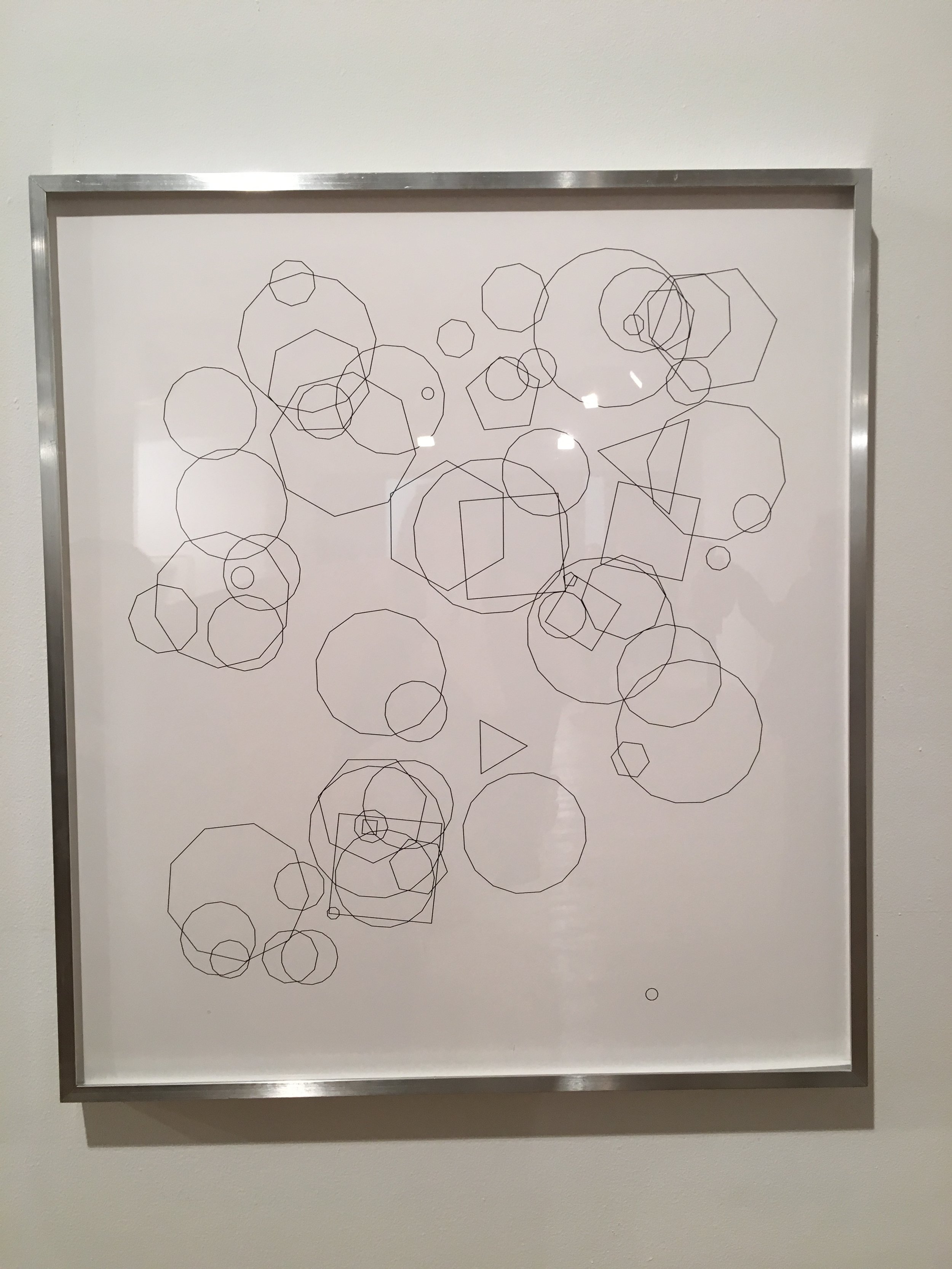

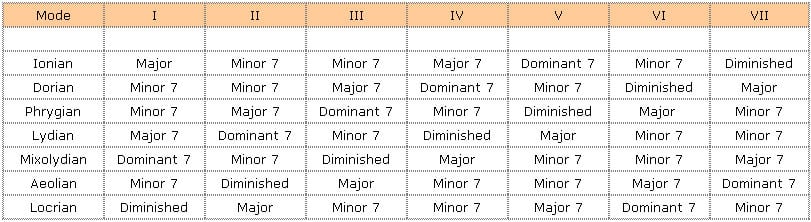

In my original assignment, I only had one fixed set of notes, played by a MonoSynth and looped over and over. It contained 4 beats of four 16th notes each (4/4 time signature). After running the code again and again during and after the assignment, the melody got stuck in my head and I started to get annoyed by hearing the same notes. I knew that if I were to create an instrument not just for myself, the main melody line needed more variety for users to choose from, so that it could be more customized. Again inspired by the virtual TB-303, where I really enjoyed the feature that randomizes the notes in the editor, I decided to recreate the randomize function in my instrument, so that users who don't like the note patch can keep randomizing until they find one that satisfies them.

I therefore added a randomize function tied to a randomize button, and looped the MonoSynth notes every "1m" (sixteen 16th notes) with a Tone.Part (function(time, notes)) event, passing in randomly generated notes each time the user clicks the button. I initialized sixteen 16th notes in a timeNotes array, where each item is itself an array containing a time and a note, such as ["0:0:0", "Bb2"], and created another array, synthNotesSet, with all 13 notes in the octave C2-C3 plus some 0s as rests for the computer to pick from. In the randomize function, I access each 16th note of the MonoSynth through synthPart.events[i].value to overwrite the previously generated notes, and I also print out the generated notes so users can see what is being played. I also added a compressor to the MonoSynth to control the volume.

As in the previous assignment, I had mouseX tied to Q and the circle size, and mouseY tied to decay and circle density. Instead of controlling them by moving the mouse, though, I changed it to mouse drag: I later created sliders for other parameters, and since the whole window is my sketch, moving the mouse to a slider would interfere with Q and decay. However, I noticed that dragging the mouse over a slider would still change my Q and decay values. I wanted each parameter to be controlled independently of the others, so my solution was to confine the mouse drag for Q and decay to a square frame with the same center as the circle.
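The randomize step can be sketched in plain JavaScript as follows. This is a hedged illustration rather than the code from the sketch: `timeNotes` and `synthNotesSet` are the names used above, while `makeTimeNotes`, the `rng` parameter, the flat spellings of the notes, and the choice of three rests are assumptions.

```javascript
// The 13 chromatic notes from C2 to C3, plus rests written as 0.
// The number of rests (three here) is an assumption.
const synthNotesSet = [
  "C2", "Db2", "D2", "Eb2", "E2", "F2", "Gb2", "G2",
  "Ab2", "A2", "Bb2", "B2", "C3", 0, 0, 0,
];

// Build 16 [time, note] pairs, one per 16th note of a 4/4 bar,
// using "bars:quarters:sixteenths" time strings such as "0:2:3".
function makeTimeNotes(noteSet, rng = Math.random) {
  const timeNotes = [];
  for (let step = 0; step < 16; step++) {
    const quarter = Math.floor(step / 4);
    const sixteenth = step % 4;
    const note = noteSet[Math.floor(rng() * noteSet.length)];
    timeNotes.push([`0:${quarter}:${sixteenth}`, note]);
  }
  return timeNotes;
}

// Overwrite the notes of an existing pattern in place and return them,
// so they can also be displayed to the user.
function randomize(timeNotes, noteSet, rng = Math.random) {
  for (const entry of timeNotes) {
    entry[1] = noteSet[Math.floor(rng() * noteSet.length)];
  }
  return timeNotes.map((entry) => entry[1]);
}
```

Adding more 0s to the note set is a simple way to make rests more likely, since every item in the array has the same chance of being picked.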

I had the square as a visual reference for manipulating the MonoSynth, but it was static, and I wanted to animate it so that it would react to some sound parameter. So I thought about adding a hi-hat layer to my composition, which would also make it sound richer. I tried to create a NoiseSynth for the hi-hat, but however I changed the ADSR values, I could never achieve the effect I wanted. Sometimes I would hear the trigger and sometimes it was just plain noise, and it sounded very mechanical, not like the hi-hat I wanted. Instead of using a NoiseSynth, I decided to just use a hi-hat sample and trigger it every "4n" with a Tone.Event and a playHihat callback function. I started the event at "0:0:1" so the hi-hat is on the backbeat.

In my original assignment, I had matched the pumping size of the circle to the BPM by manually calibrating the values. I was telling my classmate Max that I was not sure whether there was a way to animate the square rhythmically with the hi-hat without doing that again, and Max showed me his project 33 Null, made with Tone.js. In it he used Part.progress to read the progress of an event as a value between 0 and 1, which he then used to sync his animation with the sound the user triggers. I was so excited to find out about this trick. I mapped my circle scaler and square scaler to kickLoop.progress and hihatEvent.progress, so that the pumping circle and square are more precisely controlled by the BPM, kick drum, and hi-hat, which creates a more compelling visual effect.

I also imagined changing the closed hi-hat into an open hi-hat with another slider. I tried different effects on the hi-hat sample and ended up using a JCReverb. I wanted to recreate, on my square frame, the tracing circle effect Max did in his project when reverb is applied, but for some reason I couldn't get it to work in my sketch. Instead, I followed Shiffman's tutorial on drawing object trails for my square frame. I created a Frame class and mapped rectTrails to the hihat slider value, which also controls the reverb, and used rectTrails to constrain the number of trails generated (frame.history.length).
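The two ideas above, driving a scale from a 0 to 1 progress value and keeping a capped trail history, can be sketched like this. It is a minimal illustration under assumptions: `Frame`, `history`, and `rectTrails` are the names mentioned above, but `progressToScale`, `baseSize`, the scale range, and the linear shrink between triggers are hypothetical choices.

```javascript
// Hypothetical helper: turn a 0..1 progress value (such as a loop's
// progress) into a scale factor that is largest right after the trigger
// and shrinks until the next one. The linear shape is an assumption.
function progressToScale(progress, minScale = 1, maxScale = 1.5) {
  const p = Math.min(1, Math.max(0, progress));
  return maxScale - p * (maxScale - minScale);
}

// A frame that remembers its most recent sizes so they can be drawn as trails.
class Frame {
  constructor(baseSize) {
    this.baseSize = baseSize;
    this.history = [];
  }

  // Record the current size, then drop the oldest entries so that
  // at most rectTrails sizes are kept.
  update(progress, rectTrails) {
    this.history.push(this.baseSize * progressToScale(progress));
    while (this.history.length > Math.max(0, rectTrails)) {
      this.history.shift();
    }
  }
}
```

In a draw loop, each entry of `frame.history` would be drawn as one square, so a higher slider value leaves a longer trail behind the pulsing frame.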

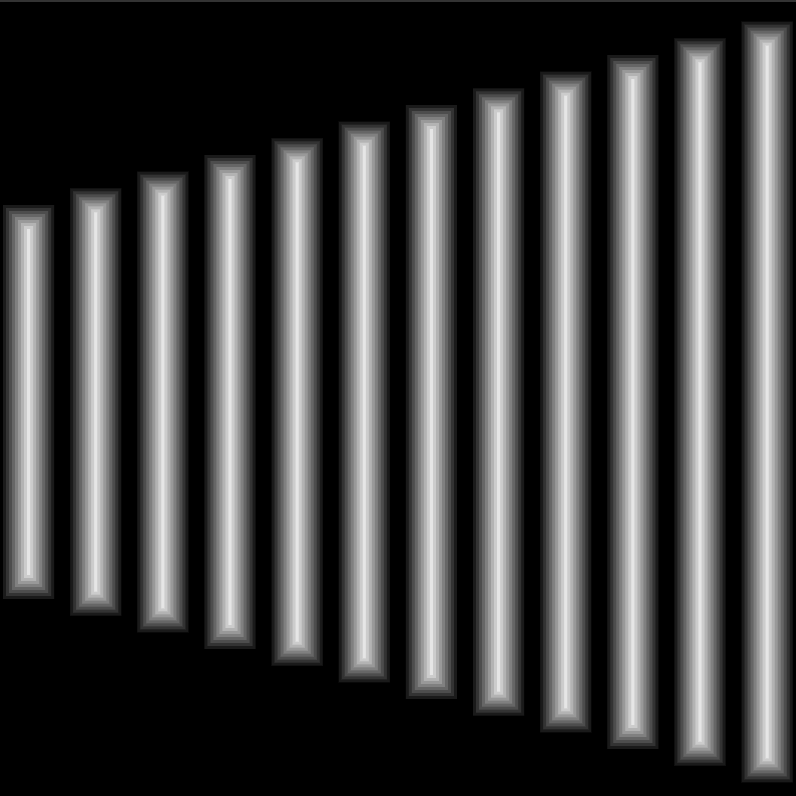

During my experimentation with synths, I liked the fmSynth sound a lot, and decided to add another layer of low-pitched synth sound to give the overall composition more depth. I wanted a key press to trigger a random fmSynth note drawn from the randomized MonoSynth note patch, so that it wouldn't sound too dissonant, but I also wanted to exclude the rest "0" from synthPart._events[].value, so that every key press plays a note instead of silence. I did some research on how to pick a random item from an array while excluding one value, and I found this example. So I created a function pickFmNote() that calls itself to generate a new note until the result is not "0".

I wanted to use an FFT/waveform to visualize the fmSynth going across the canvas. I referred to the source code for this analyzer example, but I had a lot of trouble connecting my fmSynth to Tone.Waveform. I then referred to another envelope example that draws the kick wave live from kickEnvelope. I took the block of code from its source where the kick wave is drawn and replaced kickEnvelope with fmSynth.envelope.value. The result did not look the same as the example, but I was quite surprised to see dots dispersing when the fmSynth was triggered and converging back into a straight line when it was released, so I decided to just go with this accidental outcome.
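A self-calling picker like pickFmNote() could be written roughly as below. This is a sketch, not the original function: the `notes` and `rng` parameters, the `isRest` helper, and the `null` return for an all-rest patch are assumptions added so the example is self-contained and cannot recurse forever.

```javascript
// Returns true for the rest marker, whether it is stored as 0 or "0".
function isRest(note) {
  return note === 0 || note === "0";
}

// Pick a random non-rest note by calling itself again whenever a rest
// comes up. Returns null if the patch contains only rests, which would
// otherwise recurse forever.
function pickFmNote(notes, rng = Math.random) {
  if (notes.every(isRest)) return null;
  const candidate = notes[Math.floor(rng() * notes.length)];
  return isRest(candidate) ? pickFmNote(notes, rng) : candidate;
}
```

An alternative that avoids retries altogether is to filter the rests out first, for example with `notes.filter((n) => !isRest(n))`, and pick a random item from what remains.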

There is only a limited number of things I could achieve in a week. The more I expand my project, the more ideas emerge, and the more I feel compelled to improve it and add features. Right now I have a finished audio-visual instrument with which users can create their own compositions. As a next step, however, I would refine the sound quality, experiment more with the envelope parameters and effects so that the layers sound more compatible with each other and less cheesy and mechanical overall, and add controls to turn each layer on and off to allow a greater variety of layer combinations.

my code: https://editor.p5js.org/ada10086/sketches/rJ2k48z3X

to play: https://editor.p5js.org/ada10086/full/rJ2k48z3X

instructions:

click the randomize button to generate a randomized monosynth melody

drag the bpm slider to change the speed of the composition

drag the hihat slider to add reverb to the hihat

drag the kick slider to filter out the kick drum

press key 'a' to play an fm synth note picked randomly from the monosynth note set

Demo 1:

https://youtu.be/zmlSP3IMHuk

Demo 2:

https://youtu.be/eZYGB9h9isY